Exploring Android Heap allocations in jemalloc 'new'

Vous souhaitez améliorer vos compétences ? Découvrez nos sessions de formation ! En savoir plus

Introduction

Jemalloc 'new', jemalloc >= 5, is a libc heap allocator introduced in Android 10. While sharing the same name with jemalloc and some features, its backend is very different. There is not much public research on the subject1. Moreover, despite the introduction of the scudo allocator in Android 11, a lot of devices are still using jemalloc 'new', Samsung Galaxy S23 included. For all these reasons, a post on the subject seems relevant.

The current article will focus mainly on the Android implementation2 (b0dbd53 commit as of writing) corresponding to the 5.1.0 jemalloc branch (with custom changes).

This blogpost will describe the main concepts of jemalloc 'new' and differences with jemalloc 'old'. Jemalloc version >= 5 will be referred as jemalloc new whereas jemalloc old will refer to jemalloc version < 5.

How to know if a device uses jemalloc 'new' ?

Android versions are known to be very fragmented across devices. But even if two devices share the same Android version, they could have a different libc allocator. For instance, Google Pixel 4a 5G on Android 13 uses scudo as its allocator whereas Samsung Galaxy S23 uses jemalloc 'new' instead.

The easiest way to find the allocator in use is to look at the symbols in the libc. Look for symbols starting with je_. If hundreds are found, it should be jemalloc old or new. If the Android version is fairly new, it is mostly jemalloc 'new'.

$ readelf -s libc.so | grep je_ | wc -l

292

To be sure, look for symbols containing extent in them, jemalloc 'old' will return only around 10 results and jemalloc 'new' around 100.

$ readelf -s libc.so | grep extent | wc -l

81

Overview

Jemalloc 'new' uses several important objects:

- arena (

arena_t): main structure keeping track of allocations - extent (

extent_t): virtual memory segment - region: small allocation

- slab: an extent containing regions of the same size, the underlying structures is an

extent_t - bin (

bin_t): heap of extents/slab of a specific size class - tcache (

tcache_t): cache of the most recent freed allocations for a given thread

As opposed to scudo or dlmalloc, metadata are not inlined before the address returned by malloc(), they are stored in a totally different place in memory.

Another noteworthy feature is the lack of memory unmapping. By default mapped extents are kept until the end of the process (except if the opt_retain option is set to false, the default is true).

Main structures

Arena

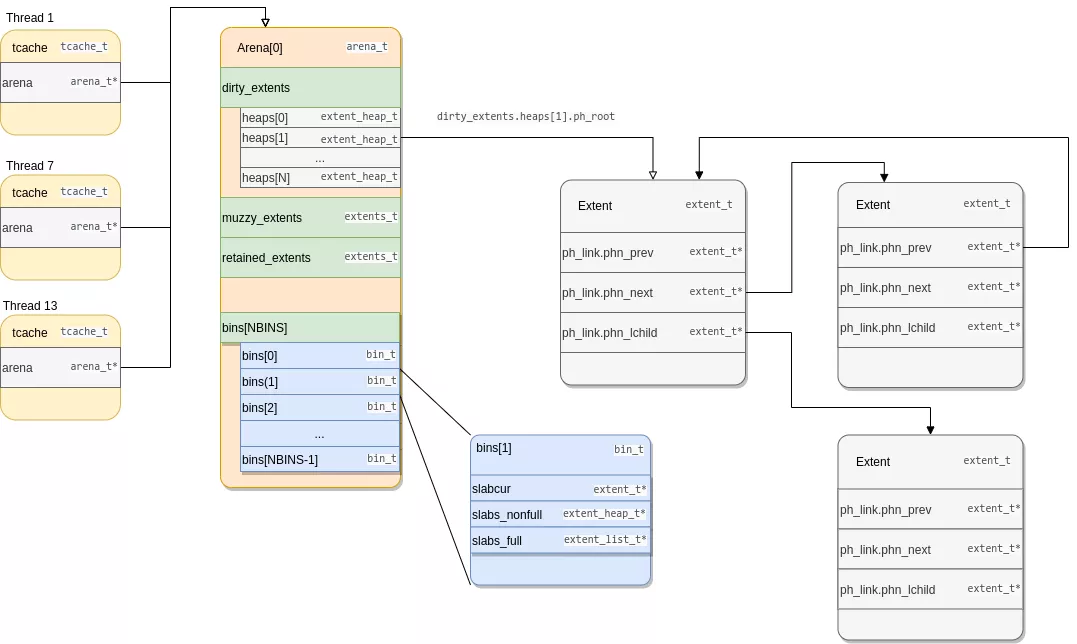

Arena structures manage parts of the memory independently to reduce concurrency locks. On Android, only 2 arenas are used. Each thread is assigned to one of these two arenas. As such, reusing an allocation made by one thread from another (for instance to exploit a use-after-free), requires the two threads to share the same arena. Its structure struct arena_s/arena_t is available in include/jemalloc/internal/arena_structs_b.h.

arenas maintain lists of allocated/free/cached chunks of memory. These basic chunks are called extents on jemalloc 'new'. They can be used as a whole for large allocations or split into smaller regions.

Available extents (i.e. free) are spread across 3 heaps:

dirty: extents newly freedmuzzy: extents decayed from dirtyretained: extents decayed from muzzy

These heaps store extents from most recently freed (dirty) to least recently freed (retained). Extents transition from a heap to the next one when a decay occurs.

Note: if the global variable

opt_retainis set to 1 (default case), when a decay occurs in the retained heap, the extents stay there and are never unmapped. So by default, the mapped extents are never unmapped from the process memory.

// include/jemalloc/internal/arena_structs_b.h

struct arena_s {

// [...]

/*

* Collections of extents that were previously allocated. These are

* used when allocating extents, in an attempt to re-use address space.

*

* Synchronization: internal.

*/

extents_t extents_dirty;

extents_t extents_muzzy;

extents_t extents_retained;

// [...]

}

The extents_t structure keeps track of free extents per size class in its heaps. The full list of extents can also be accessed through the lru field which is a simple double linked list. The bitmap field is used to determine which heaps are non-empty.

// include/jemalloc/internal/extent_structs.h

struct extents_s {

// [...]

/*

* Quantized per size class heaps of extents.

*

* Synchronization: mtx.

*/

extent_heap_t heaps[NPSIZES+1];

/*

* Bitmap for which set bits correspond to non-empty heaps.

*

* Synchronization: mtx.

*/

bitmap_t bitmap[BITMAP_GROUPS(NPSIZES+1)];

/*

* LRU of all extents in heaps.

*

* Synchronization: mtx.

*/

extent_list_t lru;

// [...]

};

Arenas also keep track of slabs with free regions (small allocations) in heaps ordered by size class (bins). On Android 64-bit processes, the number of small size classes (NBINS) is 36.

// include/jemalloc/internal/arena_structs_b.h

struct arena_s {

// [...]

/*

* bins is used to store heaps of free regions.

*

* Synchronization: internal.

*/

bin_t bins[NBINS];

// [...]

}

There is a bin for each size class. These bin_t contain extents (also called slabs in the context of small allocations) with free regions but also a list of full slabs.

// include/jemalloc/internal/bin.h

typedef struct bin_s bin_t;

struct bin_s {

// [...]

/*

* Current slab being used to service allocations of this bin's size

* class. slabcur is independent of slabs_{nonfull,full}; whenever

* slabcur is reassigned, the previous slab must be deallocated or

* inserted into slabs_{nonfull,full}.

*/

extent_t *slabcur;

/*

* Heap of non-full slabs. This heap is used to assure that new

* allocations come from the non-full slab that is oldest/lowest in

* memory.

*/

extent_heap_t slabs_nonfull;

/* List used to track full slabs. */

extent_list_t slabs_full;

// [...]

};

The slabcur field holds the last slab used that contains free regions. This field is used by jemalloc to speed up small allocations. If there are no more free regions in slabcur, the slab is inserted into the slabs_full list and another slab from slab_nonfull with free regions takes its place.

Extents, extents everywhere!

The main difference with the 'old' jemalloc is the use of extents instead of chunks. All allocations are backed by them (even jemalloc metadata). See this article3 for further information about jemalloc 'old'.

Jemalloc 'old' chunks had a fixed size of 2MB (on Android systems) whereas extents sizes can vary but are always a multiple of page size (4096 bytes). The memory reservation is done through mmap called in pages_map. Newly created extents are assigned a serial number (last extent's serial number + 1). These newly mapped extents can be split and merged depending on jemalloc needs. In case of split, children extents inherit their parent serial number. In case of a merge, the smallest serial number is kept. Two extents can be merged only if they are contiguous in memory and belong to the same arena.

References to all the created extents are kept in a global radix tree extents_rtree of type rtree_t. This tree is mostly used at the time of deallocation to find the extent corresponding to the pointer being freed.

Extents are used for two types of allocations:

- Small allocations (

<= 0x3800bytes): in this case extents are called slabs and contain several regions of the same size (the number of region depends on the size class). - Large allocations (

> 0x3800bytes): the extent contains a single allocation.

There is no huge allocations like in jemalloc 'old', these are large allocations.

// include/jemalloc/internal/extent_structs.h

struct extent_s {

uint64_t e_bits; // metadata about the extent: serial number, is it a slab, ...

void *e_addr; // pointer to actual mmaped memory

/*

* List linkage, used by a variety of lists:

* - bin_t's slabs_full

* - extents_t's LRU

* - stashed dirty extents

* - arena's large allocations

*/

ql_elm(extent_t) ql_link;

/*

* Linkage for per size class sn/address-ordered heaps, and

* for extent_avail

*/

phn(extent_t) ph_link;

union {

/* Small region slab metadata. */

arena_slab_data_t e_slab_data;

// [...]

};

}

Here are few noteworthy fields:

e_bits: extent metadatae_addr: pointer to actual mapped memoryql_linkandph_link: extents can be stored in lists and heaps,ql_linkcontains the list node of the current extent andph_linkthe heap nodee_slab_data: if the extent is a slab, this field contains slab metadata (mainly a bitmap describing free/allocated regions in this slab)

Note: For simplicity and readability, in these diagrams, data are depicted inside

extent_t. Metadata (extent_t) and actual data (result ofmmappointed by thee_addrfield), are not stored contiguously and can even be very far appart from each other.

Small allocations

An allocation is considered small if its size is <= 0x3800. In this case, extents are called slabs and each slab contains regions of the same size.

For each allocation size class, there is a corresponding slab/extent size. Assuming an allocation of size N in a slab of size M, the number of regions in the slab is M/N.

|

|

|

These sizes are computed from information contained in include/jemalloc/internal/size_classes.h as described in this comment:

// include/jemalloc/internal/size_classes.h

/*

* ...

* SIZE_CLASSES: Complete table of SC(index, lg_grp, lg_delta, ndelta, psz,

* bin, pgs, lg_delta_lookup) tuples.

* index: Size class index.

* lg_grp: Lg group base size (no deltas added).

* lg_delta: Lg delta to previous size class.

* ndelta: Delta multiplier. size == 1<<lg_grp + ndelta<<lg_delta

* psz: 'yes' if a multiple of the page size, 'no' otherwise.

* bin: 'yes' if a small bin size class, 'no' otherwise.

* pgs: Slab page count if a small bin size class, 0 otherwise.

* lg_delta_lookup: Same as lg_delta if a lookup table size class, 'no'

*

*/

#define SIZE_CLASSES \

/* index, lg_grp, lg_delta, ndelta, psz, bin, pgs, lg_delta_lookup */

SC( 0, 3, 3, 0, no, yes, 1, 3) \ // size == 8

SC( 1, 3, 3, 1, no, yes, 1, 3) \ // size == 16 (0x10)

SC( 2, 4, 4, 1, no, yes, 1, 4) \ // size == 32 (0x20)

SC( 3, 4, 4, 2, no, yes, 3, 4) \ // size == 48 (0x30)

SC( 4, 4, 4, 3, no, yes, 1, 4) \ // size == 64 (0x40)

...

Each size class has a corresponding index size class, first parameter of the SC macro (often called szind in the source code). The SIZE_CLASSES macro is used for large allocations as well. The formula to compute the size of allocations in a size class is as follow:

size == 1<<lg_grp + ndelta<<lg_delta

For instance on Android in a 64-bit process, the allocation size of the size class 1 is:

1<<3 + 1<<3 => 16

Note: Several

SIZE_CLASSESmacro are defined ininclude/jemalloc/internal/size_classes.hdepending on the underlying architecture. On Android aarch64 in a 64-bit process, theSIZE_CLASSESactually used is defined under the following conditions:# if (LG_SIZEOF_PTR == 3 && LG_TINY_MIN == 3 && LG_QUANTUM == 4 && LG_PAGE == 12)For a 32-bit process, replace

LG_SIZEOF_PTRwith 2 andLG_QUANTUMwith 3.

Extents/slabs keep track of free regions through e_slab_data containing a bitmap of available regions.

Large allocations

An allocation is considered large if its size is strictly greater than 0x3800. Like small allocations, large ones have size classes available in the same macro in include/jemalloc/internal/size_classes.h (look for size classes with the column bin with a no value).

#define SIZE_CLASSES \

/* index, lg_grp, lg_delta, ndelta, psz, bin, pgs, lg_delta_lookup */ \

...

SC( 35, 13, 11, 3, no, yes, 7, no) \ // last small alloc size class

SC( 36, 13, 11, 4, yes, no, 0, no) \ // first large alloc size class

\

SC( 37, 14, 12, 1, yes, no, 0, no) \

SC( 38, 14, 12, 2, yes, no, 0, no) \

...

Here a list of the first ones in a 64-bit process:

| # | Extent size | in tcache |

|---|---|---|

| 36 | 16384 (0x4000) | yes |

| 37 | 20480 (0x5000) | yes |

| 38 | 24576 (0x6000) | yes |

| 39 | 28672 (0x7000) | yes |

| 40 | 32768 (0x8000) | yes |

| 41 | 40960 (0xa000) | yes |

| 42 | 49152 (0xc000) | yes |

| 42 | 57344 (0xe000) | yes |

| 44 | 65536 (0x10000) | yes |

| 45 | 81920 (0x14000) | no |

| 46 | 98304 (0x18000) | no |

| ... | no | |

| 231 | 8070450532247928832 (0x7000000000000000) | no |

When a large allocation of size N is required, the closest size class M such that M >= N is selected. An extent of size M + 0x1000 is then claimed.

The actual base of the allocation (address sent back to the user), is randomized between the extent base and base + 0x1000 with a granularity of 0x40 bytes (see extent_addr_randomize in include/jemalloc/internal/extent_inlines.h).

For instance, if an allocation of 0x9000 bytes is made (i.e. malloc(0x9000)):

- The closest size class 0xa000 is selected.

- An extent of size 0xb000 (size class + 0x1000) is retrieved.

- A random pointer to the first page of this extent is returned, aligned on 64 bytes.

This is only true when an extent is extracted from a list of extents but not from a tcache (see tcache section). From a tcache, the base address is the same as before and not randomized again.

Extent selection algorithm

When a new slab or a large allocation is needed, an extent needs to be retrieved. The selection process follows these steps:

- The arena is retrieved from the thread local storage.

- Check if

arena->extents_dirtycontains a matching extent- On Android, find the oldest extent with the lowest address (described as a "first-fit" algorithm in the source code)

- Split the extent in two parts if it is too big

- Check if

arena->extents_muzzycontains a matching extent- Same process as

arena->extents_dirty

- Same process as

- Check if

arena->extents_retainedcontains a matching extent- Same process as

arena->extents_muzzy

- Same process as

- Otherwise, create a new extent using

mmap.

When an extent is created using mmap, its size depends on the value of the extent_grow_next field in the arena_t structure. extent_grow_next defines the index size class of the new created extent. After the extent creation, this value is incremented. The created extent will then be split into:

- A first extent of the requested size that will be used immediatly

- A second extent that will be placed in the

arena->extents_retainedheap.

The sz_pind2sz_tab global table, contains, for each index, the corresponding mmap size. As such, the easiest way to infer the new size, is to dump this table and to look at the index of extent_grow_next.

Here is an extract of this table:

var je_sz_pind2sz_tab = [

0x1000, 0x2000, 0x3000, 0x4000, 0x5000, 0x6000, 0x7000,

0x8000, 0xA000, 0xC000, 0xE000, 0x10000, 0x14000, 0x18000,

0x1C000, 0x20000, 0x28000, 0x30000, 0x38000, 0x40000,

0x50000, 0x60000, 0x70000, 0x80000, 0xA0000, 0xC0000,

0xE0000, 0x100000, 0x140000, 0x180000, 0x1C0000, 0x200000,

0x280000, 0x300000, 0x380000, 0x400000, 0x500000, 0x600000,

0x700000, 0x800000, 0xA00000, 0xC00000, 0xE00000, 0x1000000,

0x1400000, 0x1800000, 0x1C00000, 0x2000000, 0x2800000,

0x3000000, 0x3800000, 0x4000000, 0x5000000, 0x6000000,

0x7000000, 0x8000000, 0xA000000, 0xC000000, 0xE000000,

0x10000000, 0x14000000, 0x18000000, 0x1C000000, 0x20000000,

0x28000000, 0x30000000, 0x38000000, 0x40000000, 0x50000000,

0x60000000, 0x70000000, 0x80000000, 0xA0000000, 0xC0000000,

0xE0000000, 0x100000000, 0x140000000, 0x180000000,

0x1C0000000, 0x200000000, 0x280000000, 0x300000000,

0x380000000, 0x400000000, 0x500000000, 0x600000000,

// ...

]

Note: Before jemalloc 5.2.1, the extent was selected from

arena->extents_dirtyusing a best-fit algorithm (smallest matching size whatever the serial number). However, it seems that the implementation was leaking memory. Therefore it was replaced by the "first-fit" implementation in jemalloc 5.2.1 master repository (and not 5.2.0 as stated in the comment) and Android repository. See the comment below4.// ANDROID // The best-fit selection is reported to possiblity cause a memory leak. // This code has been completely removed from 5.2.0, so remove it from // our tree rather than risk a leak. // See https://github.com/jemalloc/jemalloc/issues/1454

Tcache

Besides the associated arena, each thread keeps in its local storage a tcache_t/struct tcache_s structure (include/jemalloc/internal/tcache_structs.h). To speed up the allocation process, some free allocations are kept in the tcache before they are made available to other threads .

When performing an allocation, jemalloc will look in bins_small for small allocations and bins_large for large allocations. If no entry is found, the allocator uses the arena bound to the thread. By default, on Android, only small allocations and large allocations with sizes <= 0x10000 are put in bins_large. The largest allocation size that can be put in the large tcache is defined by the global variable opt_lg_tcache_max (max_size = 1 << opt_lg_tcache_max).

// include/jemalloc/internal/tcache_structs.h

struct tcache_s {

// [...]

/* Drives incremental GC. */

ticker_t gc_ticker;

/*

* The pointer stacks associated with bins follow as a contiguous array.

* During tcache initialization, the avail pointer in each element of

* tbins is initialized to point to the proper offset within this array.

*/

cache_bin_t bins_small[NBINS];

// [...]

/* The arena this tcache is associated with. */

arena_t *arena;

/* Next bin to GC. */

szind_t next_gc_bin;

// [...]

/*

* We put the cache bins for large size classes at the end of the

* struct, since some of them might not get used. This might end up

* letting us avoid touching an extra page if we don't have to.

*/

cache_bin_t bins_large[NSIZES-NBINS];

};

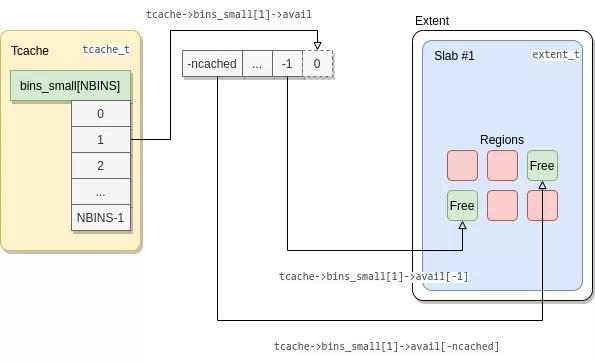

Each entry of bins_small/bins_large corresponds to a specific allocation size class and is a type cache_bin_t (include/jemalloc/internal/cache_bin.h).

// include/jemalloc/internal/cache_bin.h

typedef struct cache_bin_s cache_bin_t;

struct cache_bin_s {

/* Min # cached since last GC. */

cache_bin_sz_t low_water;

/* # of cached objects. */

cache_bin_sz_t ncached;

// [...]

/*

* Stack of available objects.

*

* To make use of adjacent cacheline prefetch, the items in the avail

* stack goes to higher address for newer allocations. avail points

* just above the available space, which means that

* avail[-ncached, ... -1] are available items and the lowest item will

* be allocated first.

*/

void **avail;

};

In cache_bin_s, avail is a dynamically allocated stack containing addresses and is always pointing to the end of the allocated buffer. The start address of the avail buffer can be retrieved with the max number of elements ncached_max : start_avail = &avail[-ncached_max]. This stack is negatively indexed so the first entry (most recently added) is located at avail[-ncached] and the last entry (least recently added) at avail[-1].

When an allocation is popped from the tcache, the entry at avail[-ncached] is returned and ncached is decreased. When an allocation is freed and pushed to the tcache, ncached is increased and the address is stored at avail[-ncached].

Note: each thread has its own tcache and is associated to an arena, but its tcache can contain pointers allocated from extents or slabs of other arenas.

For each cache bin size class, a maximum number of cached objects is allowed and stored in the global array tcache_bin_info of type cache_bin_info_t.

// include/jemalloc/internal/cache_bin.h

typedef struct cache_bin_info_s cache_bin_info_t;

struct cache_bin_info_s {

/* Upper limit on ncached. */

cache_bin_sz_t ncached_max; // int32_t

};

Flush

The memory is not infinite and cached memory should be made available again to other threads if it is too old. This is the reason why tcaches->bins_{small,large} need to be flushed. This can be triggered by 2 events:

tcache->bins_XXX[i].ncachedreachestcache_bin_info[i].ncached_max, the cache is full.tcache->g_ticket.tickstarts with the valuetcache->g_ticket.nticksand is decreased each time atcacheoperation is performed. Whentcache->g_ticket.tickreaches 0, a garbage collection is triggered: the bin pointed by the indextcache->next_gc_binis flushed andtcache->next_gc_binis increased modulo the total number of bins (tcache_event_hardin src/tcache.c).

tcache bins flushes are performed in two ways depending on the event triggering them:

- Cache full: remove half of the oldest entries from the cache

- GC triggered: remove 3/4 of the oldest entries from cache below the value

tcache->bins_XXX[i].low_waterandlow_watertakes the value ofncached

When a tcache is flushed:

- Small allocation objects (i.e. regions) are put back to their corresponding

arena->bin[i]and marked as free. - Large allocation objects (i.e. extents) are put back to the heap of available extents in their arena (the

arena->extents_dirtyheap).

Memory usage

Allocation

Small allocations

Assuming szind the index class size, the process jemalloc uses to allocate a small region is as follows:

- Look for a matching region directly in

tcache->bins_small[szind]heap - Look for a slab with a matching free region in

arena->bins[szind]heap - Create a new slab from an extent

- Look for an extent in extents heaps

arena->extents_dirtyarena->extents_muzzyarena->extents_retained

- Create a new extent if no extent is available

- split the extent/slab into regions and put it in

arena->bins[szind]

- Look for an extent in extents heaps

Large allocations

Assuming szind is the index class size, the process jemalloc uses to perform a large allocation is as follows:

- If the size is

<= 0x10000, look for a matching large alloc directly intcache->bins_large[szind]heap. - Look for an extent in extents heaps

arena->extents_dirtyarena->extents_muzzyarena->extents_retained

- Create a new extent if no extent is available.

Free

When freeing the pointer ptr, the process is the following:

- Look for the extent in which

ptrbelongs. - Find the corresponding size class index

szindfor this extent. - If its a small size class (<= 0x3800)

- put

ptrinto thetcache->bins_small[szind]cache - if the cache bin is full, flush it

- put

- If its a large size class and its size is

<= 0x10000- put

ptrinto thetcache->bins_large[szind]cache - if the cache_bin is full, flush it

- put

- If its a large size class and its size is

> 0x10000- get the

ptrextent and put it back to the heap of available extents,arena->extents_dirty

- get the

Conclusion

Jemalloc 'new' internals are quite complex and use a lot of different structures to increase speed and minimize memory fragmentation in a multithreaded process.

From an attacker point of view, here are a few takeaways:

- When exploiting heap overflow vulnerabilities, as opposed to scudo, there is no canaries between allocations. Depending on the allocation size, the adjacent region or extent can be overwritten. However, the exploitation of such vulnerabilities on large allocations is hindered by the random offset added by jemalloc.

- When exploiting use-after-free vulnerabilities, the tcache mecanism makes it easy to reuse an allocation on the same thread. However, this becomes tricky when trying to reuse an allocation from another thread as the tcache needs to be flushed and the two threads need to share the same arena.

- Another significant behavior to take into account is the lack of extent unmapping, the

opt_retainoption being set to true by default.

The author would like to thank his colleagues at Synacktiv, Clément Berthaux and Jean-Baptiste Cayrou, for their feedback and support throughout the writing process of this post.